We can even pick single tags and print them separately. Here, each tag means something for example, “ PROPN” means proper noun, “ PUNC” means punctuation. Python is a very powerful language as it offers multiple modulesĪnd methods that are tailor made to perform various operations""" Load_capabilites = spacy.load("en_core_web_sm")ĭata_text = """Python programming can be used to perform numerous mathematical operations and provide solutions for different problems. We will iterate for a single word and then with the help of “word.pos_” we will perform PoS tagging for all the words. This Anadata will store all the words from the textual data for analysis in spacy. We loaded a particular package i.e., “en_core_web_sm”. We created a variable named “load_capabilites” that will initiate the “NLP”. We imported spacy after installing it on the command prompt. Firstly we will use PoS tagging and see how it functions − We will construct a program to segregate different parts of the speech using spaCy. The entire logic of lemmatization is to gather the base word for an inflected word. We can morphologically analyse the speech and target the words with inflected endings so that we can remove them. It is an integral tool of NLP and is used to categorize inflected words found in a speech. Lemmatization is the technique of grouping together terms or words of different versions that are the same word.

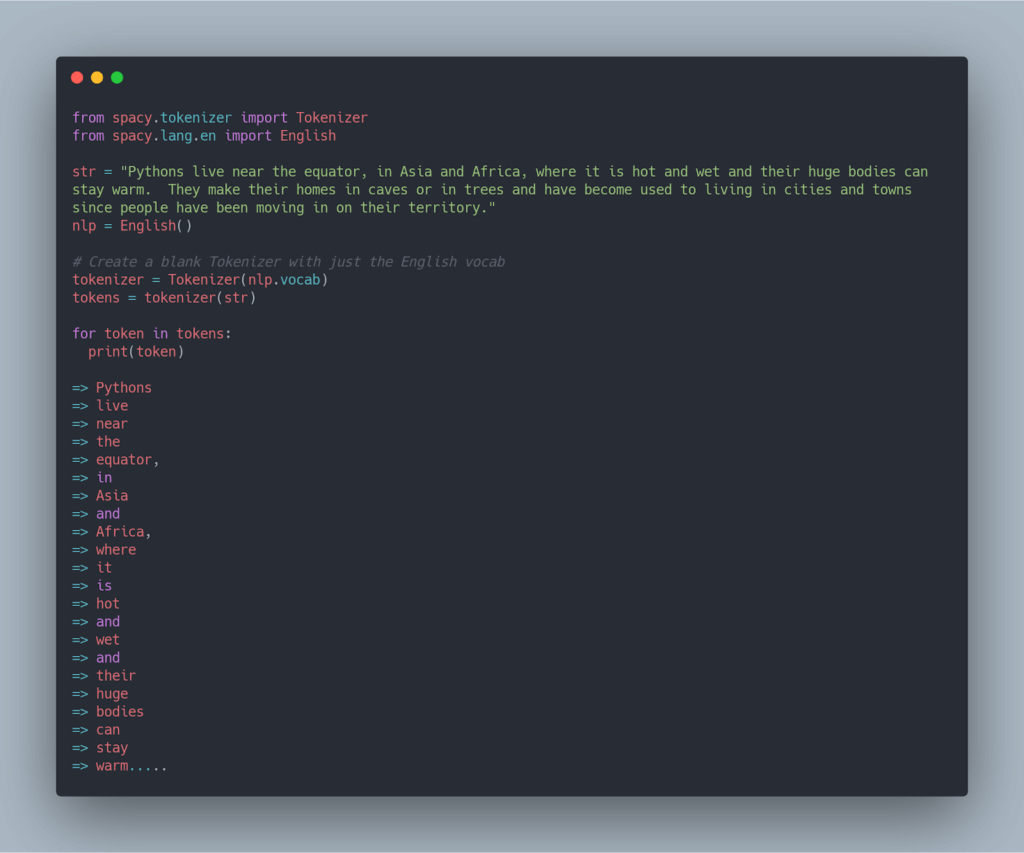

We can check which part of the speech is a verb, noun, pronoun, preposition etc. The passed dataset itself is deeply analysed. It also includes unknow words and modifies the vocabulary. We can grammatically check a speech and describe its structure. We can analyse each word and understand its context and lateral meanings. PoS (PART OF SPEECH) tagging is a technique of categorizing words in a textual data. So this convention loads the package that is in English language and its capabilities are PoS tagging and lemmatization and it is trained on written web text. “ en” decides the language, “ core” decides the capabilities, “ web” decides the genre and “ sm” decides the size. This naming convention decides what kind of pipeline package we want. For PoS tagging and lemmatization we will use − en_core_web_sm We will also load the pipeline package along by passing the correct naming convention. Once spaCy is installed we can import it on our IDE. SpaCy is installed with the help of “pip”. SpaCy is written in Cython and it provides interactive APIs. With the help of spaCy we process data at large scale and derive meaning for the machine. it paves the path for human-computer interaction by providing meaning to the human languages for machines. NLP itself is a conceptual field of artificial intelligence. It is managed by the Natural Language Processing (NLP). SpaCy is an open-source library used in deep learning. The second section will focus on the application of spaCy and the use of PoS tokening and lemmatization tokening. In the first section we will understand the significance of spaCy and discuss the concepts of PoS tagging and lemmatization. This article is divided into two sections − We will discuss about this library in detail but before we dive deep into the topic, let’s quickly go through the overview of this article and understand the itinerary. SpaCy is an open-source library and is used to analyse and compare textual data. 
In this article we will discuss about one such library known as “ spaCy”. It offers numerous libraries and modules that provides a magnificent platform for building useful techniques. The Flair issue tracker is available here.Python acts as an integral tool for understanding the concepts and application of machine learning and deep learning.
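The idea of picking single tags and collecting lemmas, as described in this article, can be sketched roughly as follows. This is a minimal sketch, not the article's original program: the helper names `analyse`, `pick_tag` and `lemmas_by_tag` are hypothetical, and `analyse` assumes that spaCy and the `en_core_web_sm` package are installed (for example with `pip install spacy` followed by `python -m spacy download en_core_web_sm`).

```python
from collections import defaultdict


def analyse(text, model_name="en_core_web_sm"):
    """Return (word, PoS tag, lemma) triples for every token in `text`.

    Assumes spaCy and the named pipeline package are installed.
    """
    import spacy  # imported lazily so the helpers below work without spaCy

    nlp = spacy.load(model_name)
    return [(token.text, token.pos_, token.lemma_) for token in nlp(text)]


def pick_tag(triples, wanted_tag):
    """Keep only the words whose PoS tag equals `wanted_tag`."""
    return [word for word, tag, _lemma in triples if tag == wanted_tag]


def lemmas_by_tag(triples):
    """Group lemmas under their PoS tag, keeping first-seen order, no repeats."""
    grouped = defaultdict(list)
    for _word, tag, lemma in triples:
        if lemma not in grouped[tag]:
            grouped[tag].append(lemma)
    return dict(grouped)
```

With spaCy available, a call such as `pick_tag(analyse(data_text), "PROPN")` would list only the words tagged as proper nouns, and `lemmas_by_tag(analyse(data_text))` would show the base forms found under each tag. Keeping the two helpers independent of spaCy means they can also be tried on hand-written triples.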

Spacy part of speech tagger install

Requires: Flair (pip install flair)

    from flair.data import Sentence
    from flair.models import SequenceTagger

    # load the tagger
    tagger = SequenceTagger.load("flair/pos-english")

    # make the example sentence discussed below
    sentence = Sentence("I love Berlin.")

    # predict the PoS tags
    tagger.predict(sentence)

    print('The following PoS tags are found:')
    # iterate over entities and print
    for entity in sentence.get_spans('pos'):
        print(entity)

This yields the following output:

    Span : "I"

So, the word "I" is labeled as a pronoun (PRP), "love" is labeled as a verb (VBP) and "Berlin" is labeled as a proper noun (NNP) in the sentence "I love Berlin".

Spacy part of speech tagger download

The following Flair script was used to train this model:

    from flair.data import Corpus
    from flair.embeddings import WordEmbeddings, StackedEmbeddings, FlairEmbeddings

    # load the corpus (Ontonotes does not ship with Flair, you need to download and reformat into a column format yourself)
    column_format=,
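The labels mentioned for the Flair example (PRP, VBP, NNP) look like Penn Treebank style tags, and a small lookup table can make such output easier to read. The sketch below rests on that assumption: `PENN_TAGS` is a hand-picked subset rather than the full tag set, `describe` is a hypothetical helper, and the (word, tag) pairs are typed in by hand instead of being produced by Flair.

```python
# Hand-picked subset of Penn Treebank tags (assumption: the model uses this
# tag set); tags missing from the table are reported as "unknown tag".
PENN_TAGS = {
    "PRP": "personal pronoun",
    "VBP": "verb, non-3rd person singular present",
    "NNP": "proper noun, singular",
    "NN": "noun, singular or mass",
    "VB": "verb, base form",
    "JJ": "adjective",
    "DT": "determiner",
    "IN": "preposition or subordinating conjunction",
}


def describe(tagged_words):
    """Turn (word, tag) pairs into readable lines using PENN_TAGS."""
    return [
        f'"{word}" -> {tag} ({PENN_TAGS.get(tag, "unknown tag")})'
        for word, tag in tagged_words
    ]


# The pairs below mirror the "I love Berlin" example; they are written by hand.
for line in describe([("I", "PRP"), ("love", "VBP"), ("Berlin", "NNP")]):
    print(line)
```

A dictionary with a fallback value keeps the helper from failing on tags that are not in the subset, which matters because the real tag set is considerably larger.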
